Quick Tip: Adding (Automatic) Open Captions to Your Webcam

TL;DR: If possible, add (closed) captions to your (live) audio/video content in order to make it more accessible and inclusive. Many tools, including Zoom, have features that allow you to add closed captions. If you cannot provide closed captions, baked-in open captions are a viable alternative. While automatically generated captions still leave a lot to be desired, they are better than nothing.

The short video above is a recording of a Zoom call. In it, as you could see, I added open captions¹ to my webcam video. The automatically generated captions are not provided via Zoom (see below) but are part of my webcam video itself.

Before discussing how something like this can be achieved, I want to briefly discuss why I am experimenting with captions for (live) video.

The bottom line is that captions make content more accessible and more inclusive. I also want to stress that accessibility is not just about disability but, more generally, about making things usable by as many people as possible in the widest variety of contexts. To name a very simple example: Captions allow students to follow a lesson even if they do not have access to speakers or if their current environment does not allow for sound. In addition, captions have been shown (see, e.g., Vanderplank 2016) to help in foreign language contexts, for example, when language learners are listening.

Unfortunately, and this goes beyond the topic of captions, a lot of inaccessible (or semi-accessible) content is still being created. In my role as an educator, I am part of the problem: while I try to make my materials and activities as accessible and inclusive as possible, I still often fail to do so. I have provided my students with countless barely accessible PDFs, videos without captions, podcasts without transcripts, and slides that you need to be able to see in order to understand them fully. This is something I am actively trying to get better at!

I want to go back to the issue of captions. If we take WCAG 2.1 as our guideline, captions are a must for prerecorded videos (Level A) and, if we take the guidelines seriously, also for live content (Level AA). Luckily, providing captions for prerecorded videos is relatively straightforward, although it usually increases the workload quite a bit, even when relying on automated tools.

Providing captions for live content (e.g., a synchronous seminar session) is a bigger challenge, as this usually requires transcribers who produce the captions live. This is also a good opportunity to point out that Zoom and many other tools provide various options for adding captions. Zoom, for example, allows you to assign participants to provide captioning, add third-party services, and even rely on built-in AI-powered live transcription.

Therefore, demonstrating open captions using Zoom, as I did above, is actually not ideal, as you should use these tightly integrated tools if they are available. That said, I have encountered many situations in which I was not able to use these tools to their full extent, and many tools do not provide this functionality yet.

The solution I will describe below is for exactly these situations. If you cannot provide closed captions (CC), for example because you do not control the Zoom session, providing open captions is the next best thing.

One Solution: Automatic Open Captions Using OBS and Twitch Subtitles

Given everything that I have outlined above, we will now assume that we want to provide captions for a live session (e.g., a stream or a Zoom class) and we do not have the option of using tightly integrated closed captioning capabilities.

In this scenario, one viable option is to embed open captions right into your webcam video, as I demonstrated in the short video above. If we have a look at this short clip, there is clearly a lot to be desired: There are a few minor mistakes in the transcription, and the captions are, at least at times, hard to follow given how they are presented. This is because I am relying on automatically generated captions and because I still have a long way to go in terms of optimization. For now, I decided to use such systems despite their very obvious shortcomings; at the very least, this highlights that accessibility technology IS something that exists.
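One presentation problem that makes live captions hard to follow is text that keeps reflowing across the screen. A common mitigation is to wrap the transcript into short lines and only show the last one or two, similar to "roll-up" captions on television. The following is a minimal TypeScript sketch of that idea; the function name `formatRollUpCaptions` and its defaults (32 characters per line, 2 lines) are my own assumptions and not something Twitch Subtitles or OBS provides.

```typescript
// Hypothetical helper (not part of Twitch Subtitles or OBS): greedily wrap a
// transcript into lines of at most `maxChars` characters and keep only the
// most recent `maxLines` lines, roughly like roll-up captions.
function formatRollUpCaptions(
  transcript: string,
  maxChars = 32,
  maxLines = 2,
): string[] {
  const words = transcript.trim().split(/\s+/).filter((w) => w.length > 0);
  const lines: string[] = [];
  let current = "";

  for (const word of words) {
    const candidate = current.length === 0 ? word : `${current} ${word}`;
    if (candidate.length <= maxChars || current.length === 0) {
      // The word fits on the current line (a single over-long word gets its own line).
      current = candidate;
    } else {
      // Line is full: start a new one with this word.
      lines.push(current);
      current = word;
    }
  }
  if (current.length > 0) {
    lines.push(current);
  }

  // Only the newest lines stay on screen.
  return lines.slice(Math.max(0, lines.length - maxLines));
}

// Example: 16-character lines, two lines visible.
console.log(formatRollUpCaptions("the quick brown fox jumps over the lazy dog", 16, 2));
// ["fox jumps over", "the lazy dog"]
```

Keeping the number of visible lines small trades context for stability: viewers lose older text sooner, but the text they are reading does not jump around as new words arrive.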

It also seems necessary to point out that these captions do not really qualify as captions in the WCAG sense, as the guidelines define captions as conveying “not only the content of spoken dialogue, but also equivalents for non-dialogue audio information needed to understand the program content”. Technically speaking, what I am describing here are intralingual, dialogue-only subtitles.

Of course, automatically generated captions are a tricky subject as well. Even though their quality has increased drastically over the last few years, they are still far from perfect and sometimes do not help at all. That said, in many situations they are the only viable option, even though human-transcribed captions would be much better. Aside from quality considerations, it should also be kept in mind that automatically generated captions, as of today, usually rely on commercial services such as Google’s Cloud Speech-to-Text API. Furthermore, there is a risk of making this look easy and effortless when, in reality, given the current technology, automatically generated captions should only be considered a “better than nothing” solution. Hopefully, this will change in the years to come!

As a side note, I also want to point out that the same technology can be used to provide translated captions (subtitles) in real time. While this adds a further, possibly error-introducing, step of automation, having subtitles available allows more people to participate. Like automated transcription, this is not perfect yet, but machine translation has made a gigantic leap in the last few years. In many cases, as can be seen on YouTube, for example, automatically translated subtitles are already good enough to understand a video.

Technical Solution

Finally, we have reached the point at which I am actually going to discuss how to achieve what you saw above: adding automatic open captions to a webcam video!

Generally speaking, the solution works as follows: Captions are generated using a web application and added to an Open Broadcaster Software (OBS) scene. OBS is then used as a virtual webcam across applications (e.g., Zoom). Put very simply, OBS lets you create video scenes containing the video from your webcam, which you can then use as a virtual webcam in most other applications.

While there are various solutions for the first step, I am currently relying on Twitch Subtitles by PubNub (Stephen Blum). It is a very simple and easily customizable solution. Also, despite its reliance on PubNub, the project is available on GitHub and can be run locally.

Fortunately, the tutorial provided by PubNub is great, and you should be able to get everything up and running quickly. Nevertheless, I want to briefly outline the process: You open the web application in a browser, and it transcribes your voice using the new Web Speech API. Using PubNub², you are automatically connected to a (private) channel. Everyone in that channel will see the generated captions in their browser. You then join that same channel using OBS’s Browser Source. Ultimately, OBS simply adds a browser window containing the open captions as an overlay to your video.
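To make this data flow a bit more concrete, here is a heavily simplified TypeScript sketch. It replaces PubNub with a hypothetical in-memory `CaptionChannel` class and models speech recognition results as plain objects with `isFinal` and `transcript` fields (in the browser, the Web Speech API nests the transcript inside each result's list of alternatives). The names `publishFinalResults` and `CaptionOverlay` are illustrative assumptions, not part of Twitch Subtitles, PubNub, or OBS, and forwarding only final results is a choice made for this sketch.

```typescript
// Hypothetical in-memory stand-in for a publish/subscribe channel.
// This is NOT the PubNub SDK; it only illustrates the pattern.
type CaptionListener = (message: string) => void;

class CaptionChannel {
  private listeners: CaptionListener[] = [];

  subscribe(listener: CaptionListener): void {
    this.listeners.push(listener);
  }

  publish(message: string): void {
    // Deliver the message to every subscriber of this channel.
    for (const listener of this.listeners) {
      listener(message);
    }
  }
}

// Simplified shape of a speech recognition result, flattened so the
// sketch runs outside a browser.
interface RecognitionResultLike {
  isFinal: boolean;
  transcript: string;
}

// Transcriber side (the page you speak into): publish only final results,
// so viewers do not see every intermediate guess.
function publishFinalResults(results: RecognitionResultLike[], channel: CaptionChannel): void {
  for (const result of results) {
    if (result.isFinal) {
      channel.publish(result.transcript.trim());
    }
  }
}

// Overlay side (e.g., the page loaded by OBS's Browser Source):
// remember the latest caption so it can be rendered.
class CaptionOverlay {
  text = "";

  constructor(channel: CaptionChannel) {
    channel.subscribe((message) => {
      this.text = message;
    });
  }
}

// Usage: one publisher, one overlay subscribed to the same channel.
const channel = new CaptionChannel();
const overlay = new CaptionOverlay(channel);
publishFinalResults(
  [
    { isFinal: false, transcript: "hello wor" },
    { isFinal: true, transcript: "hello world " },
  ],
  channel,
);
console.log(overlay.text); // prints "hello world"
```

In the real setup, the publisher and the overlay run in different applications (your browser and OBS), which is exactly why a hosted messaging service is needed to connect them.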

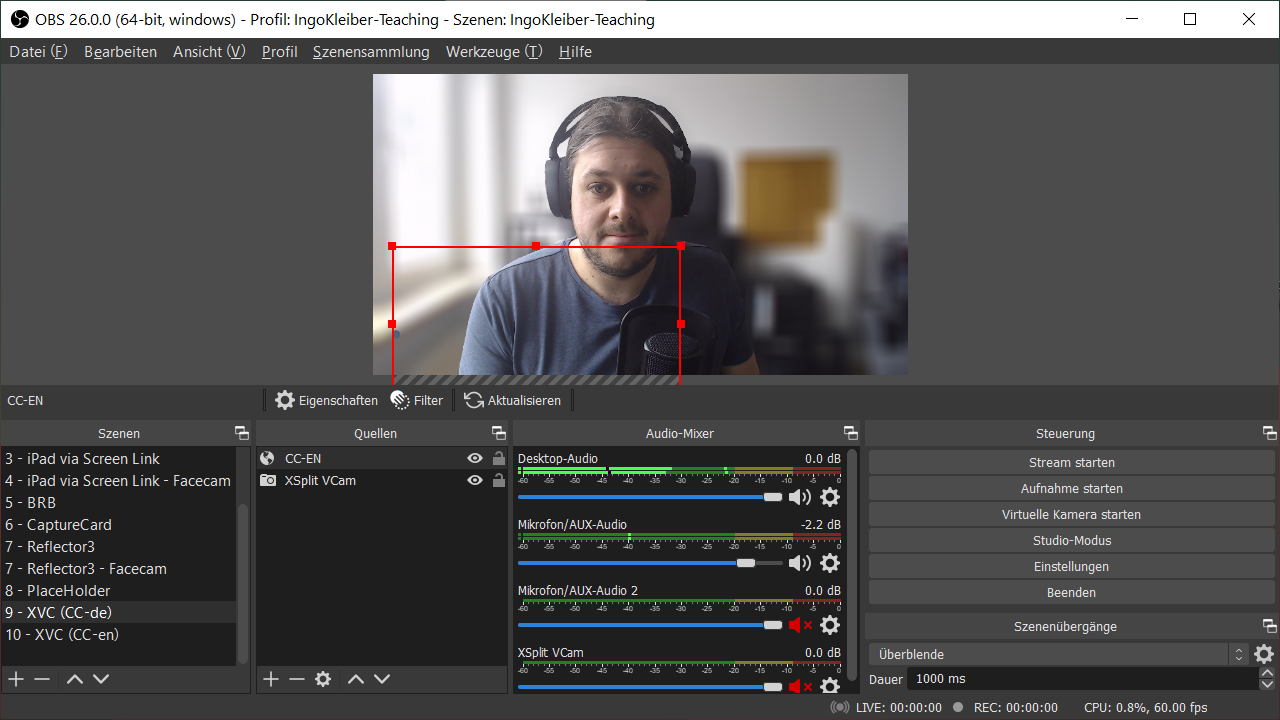

The red box is a browser window ultimately containing the captions. It is positioned so that the captions will appear in the bottom left corner of my video.
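Positioning the caption box is simple arithmetic. The following sketch assumes that a source is placed by its top-left corner (which I believe is OBS's default positional alignment) and uses a hypothetical 1920x1080 canvas with a 1200x200 caption box; the function name `bottomLeftPosition` and all numbers are illustrative assumptions.

```typescript
// Hypothetical helper: compute the top-left coordinates that place a box
// in the bottom-left corner of a canvas, `margin` pixels from both edges.
interface BoxSize {
  width: number;
  height: number;
}

interface BoxOrigin {
  x: number;
  y: number;
}

function bottomLeftPosition(canvas: BoxSize, box: BoxSize, margin: number): BoxOrigin {
  if (box.width + margin > canvas.width || box.height + margin > canvas.height) {
    throw new RangeError("caption box plus margin does not fit on the canvas");
  }
  // x: distance from the left edge; y: push the box down so its bottom
  // edge sits `margin` pixels above the bottom of the canvas.
  return { x: margin, y: canvas.height - box.height - margin };
}

const pos = bottomLeftPosition({ width: 1920, height: 1080 }, { width: 1200, height: 200 }, 40);
console.log(pos); // { x: 40, y: 840 }
```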

If you dislike how simplistic, and arguably also technical, this solution is, I would recommend having a look at Web Captioner. It is a very similar but more polished service that also has various neat integrations with lots of platforms and tools (including Zoom, Twitch, and OBS).

BEWARE: In both cases, even if you run the actual software on your local machine, it is extremely important to be aware that the transcriptions are generated in the cloud. I would definitely not recommend using this kind of automated transcription for any sensitive material! Also, do not auto-transcribe anyone else’s voice without their consent: you would effectively be sending their voice data to Google without them knowing.

Open Captions vs. Closed Captions in Video Conferences

As I already discussed above, many video-conferencing solutions have tightly integrated captioning functionality. In contrast to open captions, which are ‘baked-in’ and cannot be controlled by viewers, these allow you to provide actual closed captions (CC), which viewers can adjust and turn on or off.

For example, Skype and Microsoft Teams support automatic real-time captions. In many cases, it is also possible to add captions, automatic or human-transcribed, to a video conference. Zoom, for example, allows either participants or third-party services to provide captions.

If possible, you should always opt for closed captions, as they provide more flexibility and you do not have to modify the video itself. However, if closed captions are not supported, open captions are a viable option.

-

I am using the term caption to refer to any kind of synchronized text alternative for audio content. Please note that the terminology is rather complex and the terms (closed / open) captions and subtitles are often used interchangeably. However, subtitles are usually meant for people able to hear the audio (e.g., translations), while captions are meant to ensure accessibility for viewers who cannot listen to the audio. Hence, captions also usually include information going beyond spoken dialogue. While so-called open captions are part of the video itself (‘baked-in’), closed captions can be configured as well as turned on and off by the viewers. Another terminological distinction is often made between interlingual subtitles (in a different language than the one being spoken, i.e., a translation) and intralingual subtitles (in the same language as the one being spoken). ↩

-

Technically, PubNub is a publish/subscribe messaging API. It is used to sync the captions between different browsers and applications. ↩