Tracking and Inspecting Prompts of LangChain Agents Using Weights & Biases

Considering the (generative) AI space, (autonomous) agents are everywhere right now! Aside from ever more powerful and fortunately also open Large Language Models (LLMs), LangChain has become a staple of developing AI-driven applications and agents.

Langchain is a powerful and feature-rich open source framework for working with LLMs. As I have demonstrated in another article, it can, for example, be used to easily build question-answering systems using LLMs. However, another key feature of LangChain is the ability to create and use these so-called agents mentioned above. Put simply, they are applications that combine LLMs, other tools and interfaces (e.g., a browser or an API), as well as memory to solve complex tasks.

LLM-based agents usually use a series of prompts (based on prompt templates) to retrieve necessary data and information and prepare data as input for the tools at their disposal (see below). Of course, the LLM is also used to decide which tools to use for a given task in the first place. Autonomously choosing tools is done, for example, using the ReAct framework proposed by Yao et al. (2022).

When using and/or developing (autonomous) agents, it is crucial to understand how the agent uses the LLM, i.e., which prompts are being executed and their output. Understanding the prompts in play increases transparency and explainability and is crucial in debugging.

Furthermore, thinking about education, carefully considering the prompts generated and used by the agent(s) is necessary to understand both the decision-making process and the interactions with various tools as well as the data involved. Especially when using multiple tools and data sources in conjunction, understanding individual steps is a crucial component of being a competent user of such systems.

Hence, this article demonstrates how to track and inspect the prompts used by LangChain agents. After a brief introduction, we will first see how to inspect prompts using only LangChain before using Weights & Biases (W&B) to get a better and clearer insight into what is happening.

Some Background Information

Before looking at prompts, I will provide some background information on LangChain tools and Weights & Biases.

You can safely skip down if you have a basic understanding of Weights & Biases and how tools work in LangChain.

LangChain and Agent Tools 101

It is important to understand, at a basic level, how agents interact with tools. While this will lead us away from tracking and inspecting prompts for a minute, it will demonstrate why reviewing executed prompts is so essential.

According to the LangChain documentation, “[t]ools are functions that agents can use to interact with the world.” In the simplest case, a tool is a function that accepts a single string (query) and returns a string output. This allows the LLM to use interact with tools and, for example, information sources through language.

Here is a very simple example using a custom tool that can reverse strings. Using the @tool decorator, while being limited, currently is the simplest way of creating basic tools in LangChain.

@tool

def reverse_string(query: str) -> str:

'''Reverses a string.'''

return query[::-1]

llm = OpenAI(temperature=0)

tools = [reverse_string]

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

agent.run('What is LangChain in reverse?')

The docstring (“Reverses a string”) is crucial here as this will be passed to the LLM as information about what the tool is able to do.

When executed, LangChain uses the tool to reverse the string. While the task itself is incredibly boring, the fact that the agent can pick the right tool and apply it is very impressive.

> Entering new AgentExecutor chain...

I need to reverse the string

Action: reverse_string

Action Input: LangChain

Observation: niahCgnaL

Thought: I now know the final answer

Final Answer: niahCgnaL

> Finished chain.

Agent Output

The important bit is that everything is based on prompts and textual input and output. The agent, by means of the LLM, needs to figure out the input to the tool (i.e., the function) and work with its output. In this example, two prompts are being executed to solve the problem.

First, the agent is prompting the LLM with information regarding the tools available. As you can see, the docstring for reverse_string is used as part of the prompt to inform the LLM what the tool can be used for. If this was a more complex tool, we would need to provide additional information, e.g., with regard to the expected input format.

Answer the following questions as best you can. You have access to the following tools:

reverse_string: reverse_string(query: str) -> str - Reverses a string.

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [reverse_string]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: What is LangChain in reverse?

Thought:

Prompt

The LLM responds with the action (i.e., the tool to use) and the action input (i.e., the query for the function):

[Thought:] I need to reverse the string

Action: reverse_string

Action Input: LangChain

Completion / Agent Output

In the background, LangChain is now running the chosen tool using the “Action Input.”

Now, the second prompt that is being executed can use the result of reverse_string:

Answer the following questions as best you can. You have access to the following tools:

reverse_string: reverse_string(query: str) -> str - Reverses a string.

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [reverse_string]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: What is LangChain in reverse?

Thought: I need to reverse the string

Action: reverse_string

Action Input: LangChain

Observation: niahCgnaL

Thought:

Prompt

As expected, the LLM correctly returns:

[Thought:] I now know the final answer

Final Answer: niahCgnaL

Completion / Agent Output

Internally, LangChain is making heavy use of chains here. The essential addition in the context of agents is the tools that allow LangChain and the LLM to interact with the world.

Ultimately, seeing and understanding the prompts and their outputs is crucial in understanding how the tools are used and especially when debugging agents. For example, if the LLM produces “bad” input for the tool, we need to understand the prompts leading to that input.

Weights & Biases 101

Weights & Biases is a very powerful commercial MLOps platform that is free to use for personal projects.

While the cloud-based platform has many exciting features has many exciting features (also for prompt engineering), we will be using it to track our agent and display its prompts.

Put simply, we can add W&B (using the wandb library) to our existing Python scripts and start logging what happens in great detail. Fortunately, there is already a powerful integration between W&B and LangChain that we can leverage.

By default, all information will be stored locally (wandb folder) as well as in the cloud on W&B’s platform. This is also incredibly useful as we do not have to think about saving log files when iterating on our code.

Tracking and Inspecting Prompts of LangChain Agents

In the following, we will be working with a very simple LangChain agent as an example.

The agent is tasked with finding out when the European Song Contest first happened and how many years have passed since.

To do so, the agent will have access to two tools: wikipedia to find information on the internet and llm-math to do calculations using Python.

We will first look at the agent without any logging. Then we will use LangChain’s verbosity settings before moving on to Weights & Biases.

Notes on the Code and Models

In this example, we are using OpenAI’s text-davinci-003 model. That said, any reasonably powerful model would work for this particular example – using anything more powerful (e.g., gpt-4) would be a waste of resources.

Furthermore, we are using a zero-shot-react-description agent, which determines the tools to use based only on the description of the tool.

Two API keys (OpenAI and Weights & Biases) are necessary for these examples to work as they are. They are stored in a .env file:

OPENAI_API_KEY=XYZ

WANDB_API_KEY=XYZ

In Python, we use dotenv to make these available to us as follows:

from dotenv import load_dotenv

load_dotenv()As has become obvious, we are using the Python version of LangChain.

A Basic LangChain Agent

Let’s start with a very simple agent without any additional logging. In its simplest form, the agent looks like this:

from dotenv import load_dotenv

from langchain.agents import AgentType, initialize_agent, load_tools

from langchain.llms import OpenAI

# Load environment variables; especially the OpenAI API key

load_dotenv()

llm = OpenAI(model_name='text-davinci-003', temperature=0)

tools = load_tools(['wikipedia', 'llm-math'], llm=llm)

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION)

output = agent.run('When did the first Eurovision Song Contest take place? What is 2023 minus this year?')

print(output)Once the agent has finished, the output reads as follow:

The first Eurovision Song Contest took place in 1956 and 2023 minus this year is 67.

This is a perfectly correct answer to our question! However, we have no idea how we got there! Depending on your use case and situation, this may not be a problem at all!

We Need More Verbosity

Especially for relatively simple agents, using verbose flags is all that is needed to get a good understanding of what happens under the hood. Here is a slightly modified version of the agent that will produce a lot more output, including the prompts being used.

from dotenv import load_dotenv

from langchain.agents import AgentType, initialize_agent, load_tools

from langchain.callbacks import StdOutCallbackHandler

from langchain.llms import OpenAI

# Load environment variables; especially the OpenAI API key

load_dotenv()

llm = OpenAI(model_name='text-davinci-003', temperature=0, verbose=True)

tools = load_tools(['wikipedia', 'llm-math'], llm=llm)

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

agent.agent.llm_chain.verbose=True

callbacks = [StdOutCallbackHandler()]

agent.run('When did the first Eurovision Song Contest take place? What is 2023 minus this year?', callbacks=callbacks)As you can see, both the llm as well as the agent have the verbose flag set to True. Furthermore, we manually set the llm_chain to be verbose and added a callback to the run method.

The callback is important, as it allows us to “hook into the various stages of your LLM application” (LangChain) at a much deeper level.

Verbose LangChain Agent Output

Verbose LangChain Agent Output

Without resorting to much more complicated approaches, this is as verbose as LangChain currently gets.

Now we can see, for example, the following output, which includes the “prompt after formatting,” i.e., the populated prompt template.

> Entering new LLMChain chain...

Prompt after formatting:

Answer the following questions as best you can. You have access to the following tools:

Wikipedia: A wrapper around Wikipedia. Useful for when you need to answer general questions about people, places, companies, facts, historical events, or other subjects. Input should be a search query.

Calculator: Useful for when you need to answer questions about math.

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [Wikipedia, Calculator]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: When did the first Eurovision Song Contest take place? What is 2023 minus this year?

Thought:

Prompt

This is the first prompt, as it is being sent to the LLM. As we can see, the available tools and the question have been injected into the prompt template.

In many cases, this level of verbosity is absolutely enough in order to understand what is going on. That said, the output could be easier to read, and with more complex agents, tracking the prompts this way is rather tedious.

Insights Using Weights & Biases

Of course, this is where Weights & Biases is finally coming into play!

With a few modifications to our code, we can log everything our agent is doing to W&B for further analysis. As mentioned above, we can leverage the existing WandbCallbackHandler to quickly integrate LangChain and W&B.

from datetime import datetime

from dotenv import load_dotenv

from langchain.agents import AgentType, initialize_agent, load_tools

from langchain.callbacks import StdOutCallbackHandler, WandbCallbackHandler

from langchain.llms import OpenAI

# Load environment variables; especially the OpenAI API key

load_dotenv()

# Weights & Biases

session_group = datetime.now().strftime("%m.%d.%Y_%H.%M.%S")

wandb_callback = WandbCallbackHandler(

job_type="inference",

project="LangChain Demo",

group=f"minimal_{session_group}",

name="llm",

tags=["demo"],

)

callbacks = [StdOutCallbackHandler(), wandb_callback]

llm = OpenAI(model_name='text-davinci-003', temperature=0)

tools = load_tools(['wikipedia', 'llm-math'], llm=llm)

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION)

agent.run('When did the first Eurovision Song Contest take place? What is 2023 minus this year?', callbacks=callbacks)

wandb_callback.flush_tracker(agent, reset=False, finish=True)The key part here is to add another callback (wandb_callback), which will be used to track our agent. As you can see below, quickly after starting the agent, data becomes available on the W&B platform. When configuring the callback, we can also define the project name, tags, etc.

Weights & Biases New Data

Weights & Biases New Data

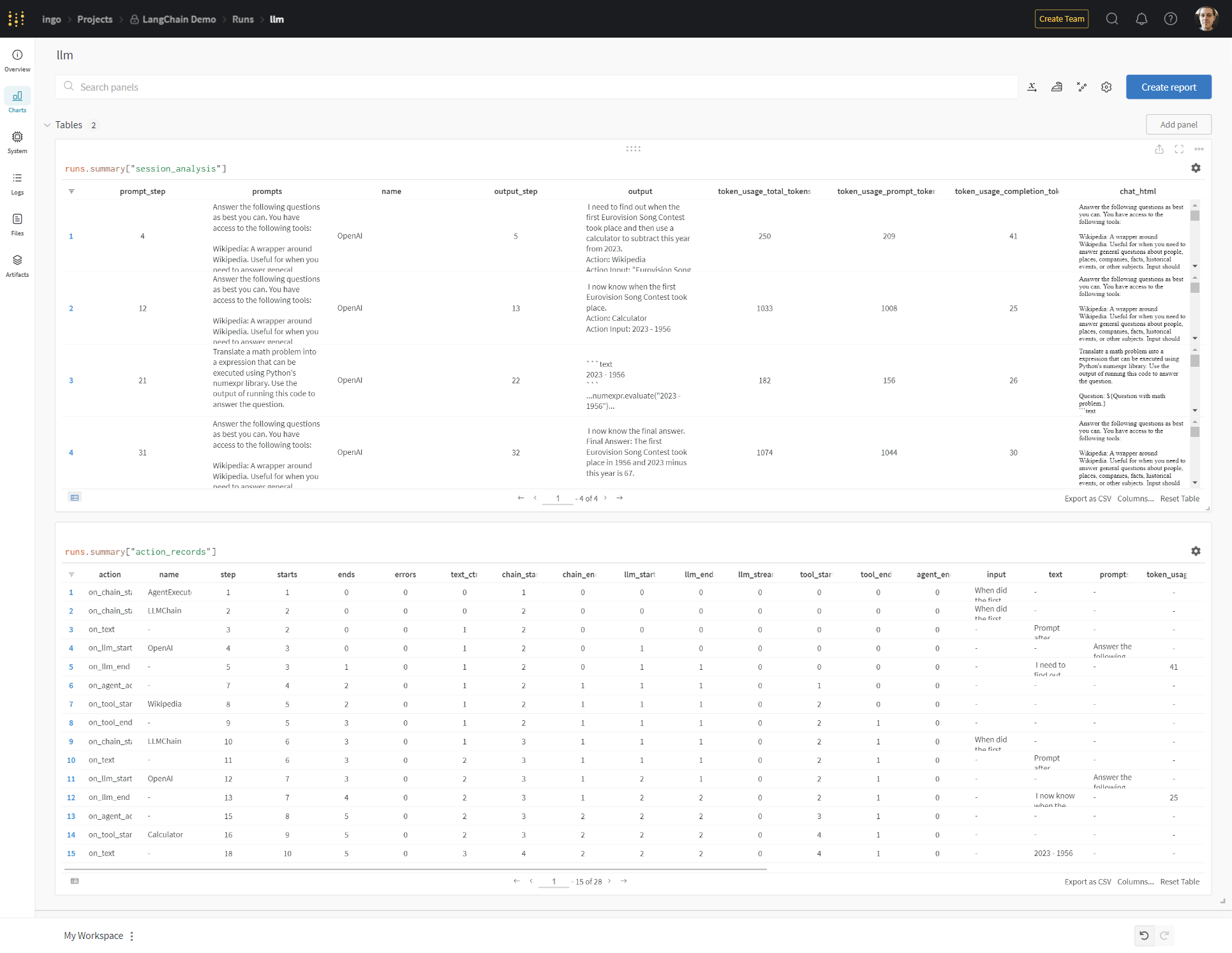

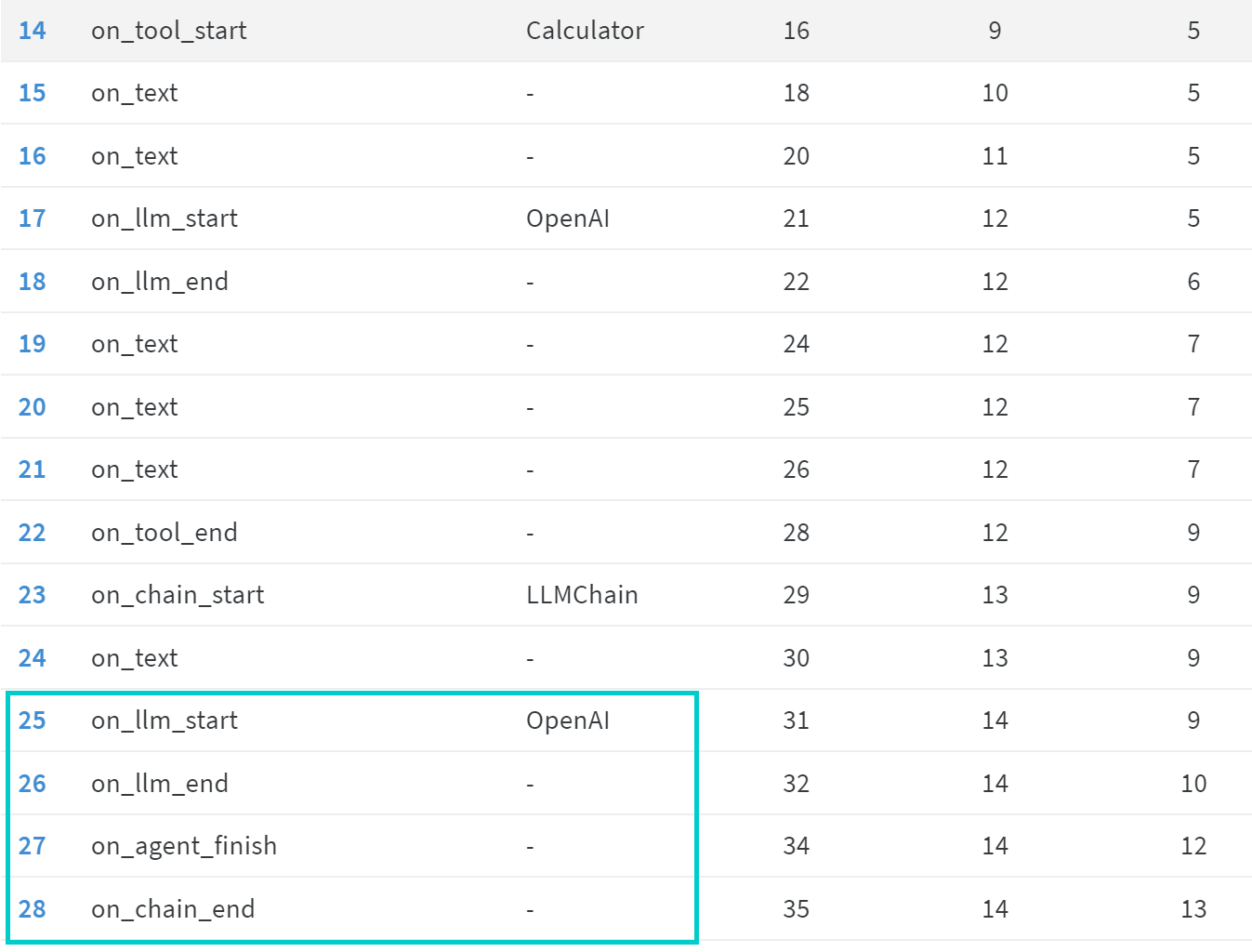

In the screenshot below, we can see “session_analysis” (our prompts) on top and the “action_records” on the bottom. Using these tables, we can see exactly what happened during the execution of our agent.

We can also see how W&B sorts everything by “steps.” So, for example, for each prompt, we can see a “prompt_step” and an “output_step,” which match the steps in the “action_records.”

Weights & Biases Analysis

Weights & Biases Analysis

Having these capabilities, let us try to understand how we got to that “The first Eurovision Song Contest took place in 1956 and 2023 minus this year is 67” result step by step.

To do so, we will focus on the four prompts that have been sent to the LLM and the output we have received.

Prompt 1 – Initial Prompt and Tooling

In the first step, the LLM is prompted with the question (task) as well as the available tools and their description.

Answer the following questions as best you can. You have access to the following tools:

Wikipedia: A wrapper around Wikipedia. Useful for when you need to answer general questions about people, places, companies, facts, historical events, or other subjects. Input should be a search query.

Calculator: Useful for when you need to answer questions about math.

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [Wikipedia, Calculator]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: When did the first Eurovision Song Contest take place? What is 2023 minus this year?

Thought:

Prompt

The completion (output) reads as follows:

[Thought:] I need to find out when the first Eurovision Song Contest took place and then use a calculator to subtract this year from 2023.

Action: Wikipedia

Action Input: "Eurovision Song Contest"

Completion / Agent Output

The LLM picks wikipedia as the first tool of choice and wants to query it with “Eurovision Song Context”.

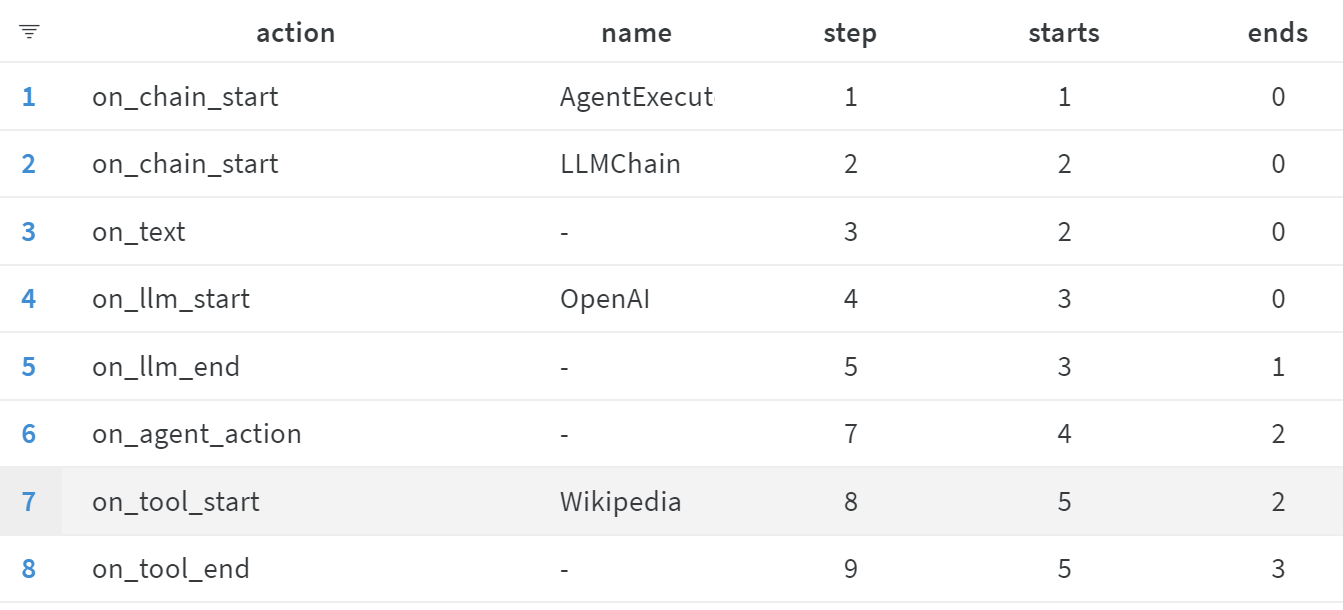

In the “action_records” we can see that following this (Step 8), wikipedia is called. Let us have a look at the next prompt.

Weights & Biases Action Records

Weights & Biases Action Records

Prompt 2 – Initial Prompt and Tooling

The next prompt, as we can see below, contains the Wikipedia page (shortened below) as retrieved by the tool. This provides the LLM with the outside information it needs to progress.

Answer the following questions as best you can. You have access to the following tools:

Wikipedia: A wrapper around Wikipedia. Useful for when you need to answer general questions about people, places, companies, facts, historical events, or other subjects. Input should be a search query.

Calculator: Useful for when you need to answer questions about math.

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [Wikipedia, Calculator]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: When did the first Eurovision Song Contest take place? What is 2023 minus this year?

Thought: I need to find out when the first Eurovision Song Contest took place and then use a calculator to subtract this year from 2023.

Action: Wikipedia

Action Input: "Eurovision Song Contest"

Observation: Page: Eurovision Song Contest

Summary: The Eurovision Song Contest (French: Concours Eurovision de la chanson), often known simply as Eurovision, is an international song competition [...]

Thought:

Prompt

The LLM acknowledges this newfound knowledge and determines the following step:

I now know when the first Eurovision Song Contest took place.

Action: Calculator

Action Input: 2023 - 1956

Completion / Agent Output

The first half of the task has been successfully finished! Now we need to do some calculations.

Prompt 3 – Doing Math

In this next step, the LLM needs to create a valid query for llm-math based on the previous “Action Input.” As llm-math uses numexpr.evaluate at its core, the LLM is prompted to produce a valid input. In other words: We need the LLM to produce the correct input for our Python function based on the previously generated input.

Translate a math problem into a expression that can be executed using Python's numexpr library. Use the output of running this code to answer the question.

Question: ${Question with math problem.}

```text

${single line mathematical expression that solves the problem}

```

...numexpr.evaluate(text)...

```output

${Output of running the code}

```

Answer: ${Answer}

Begin.

Question: What is 37593 * 67?

```text

37593 * 67

```

...numexpr.evaluate("37593 * 67")...

```output

2518731

```

Answer: 2518731

Question: 2023 - 1956

Prompt

As we can see, the agent is using one- or few-shot prompting, providing the LLM with an example.

This produces the following, which serves as input for the calculator (i.e., llm-math and numexpr.evaluate):

```text

2023 - 1956

```

...numexpr.evaluate("2023 - 1956")...

Completion / Agent Output

Prompt 4 – Bringing Everything Together

How having the correct input, the tool can perform the calculation. This being done, all the information is passed to the LLM again:

Answer the following questions as best you can. You have access to the following tools:

Wikipedia: A wrapper around Wikipedia. Useful for when you need to answer general questions about people, places, companies, facts, historical events, or other subjects. Input should be a search query.

Calculator: Useful for when you need to answer questions about math.

Use the following format:

Question: the input question you must answer

Thought: you should always think about what to do

Action: the action to take, should be one of [Wikipedia, Calculator]

Action Input: the input to the action

Observation: the result of the action

... (this Thought/Action/Action Input/Observation can repeat N times)

Thought: I now know the final answer

Final Answer: the final answer to the original input question

Begin!

Question: When did the first Eurovision Song Contest take place? What is 2023 minus this year?

Thought: I need to find out when the first Eurovision Song Contest took place and then use a calculator to subtract this year from 2023.

Action: Wikipedia

Action Input: "Eurovision Song Contest"

Observation: Page: Eurovision Song Contest

Summary: The Eurovision Song Contest (French: Concours Eurovision de la chanson), often known simply as Eurovision, is an international song competition [...]

Thought: I now know when the first Eurovision Song Contest took place.

Action: Calculator

Action Input: 2023 - 1956

Observation: Answer: 67

Thought:

Prompt

Having all the necessary information, the LLM can now produce the final answer:

I now know the final answer.

Final Answer: The first Eurovision Song Contest took place in 1956 and 2023 minus this year is 67.

Completion / Agent Output

Looking at our W&B “action_records,” we can see that these are the last steps in the execution.

Weights & Biases Action Records

Weights & Biases Action Records

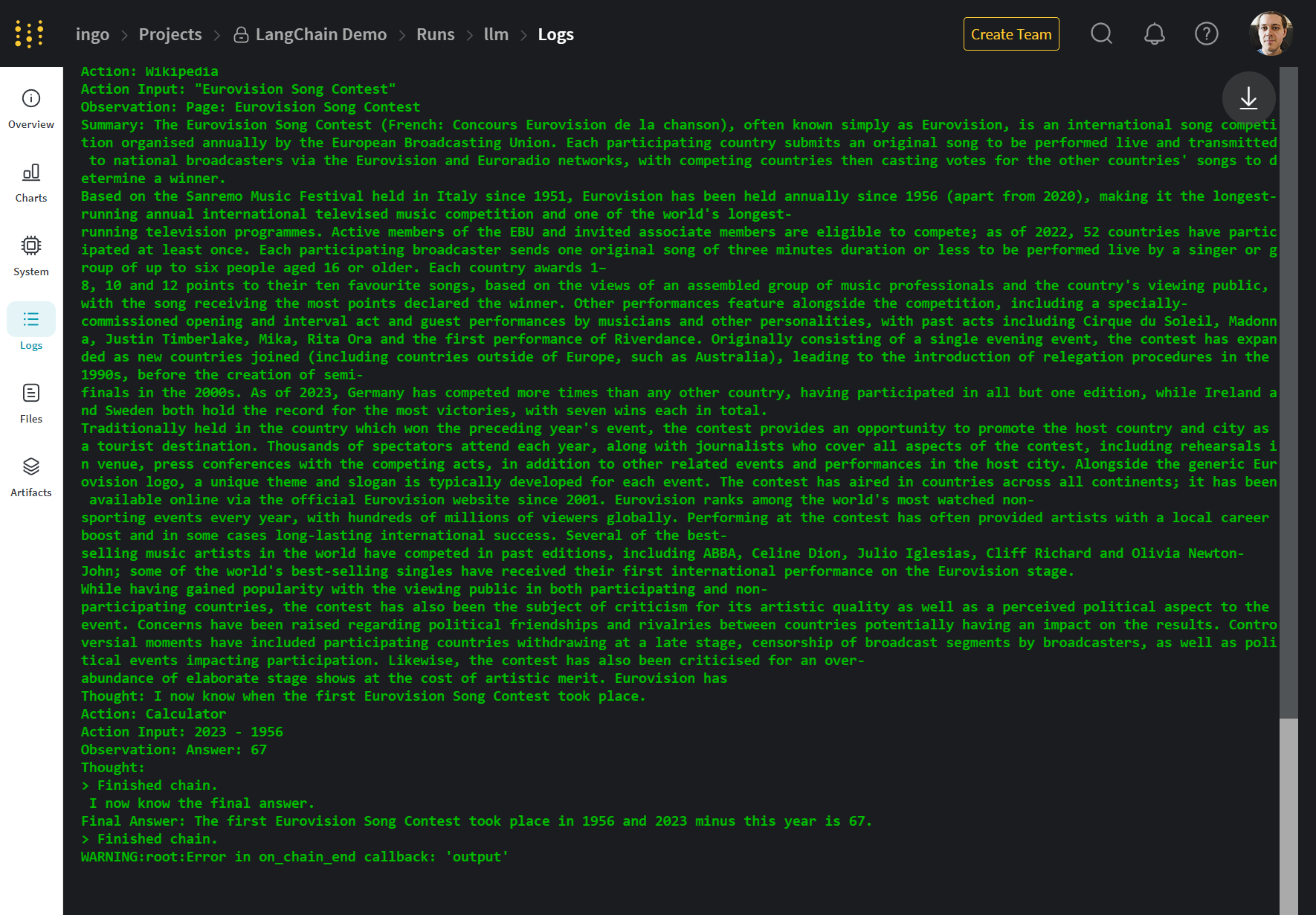

Knowing that W&B also keeps track of the regular log is also helpful. This allows us to go through the whole execution as it has been logged via standard output at any point in time.

Weights & Biases Log (Standard Out)

Weights & Biases Log (Standard Out)

As mentioned above, all of the information is also stored locally in the wandb folder.

Conclusion

(Autonomous) agents are one of the most exciting developments in the generative AI space right now! They have the potential to make LLMs useful for performing many tasks that require access to the “world.”

That said, often, they are seen as almost magic black boxes that cannot be explained or understood. However, under the hood, these are merely systems that cleverly use prompt engineering and powerful LLMs to tie various tools and interfaces together.

Being able to investigate the prompts that are being executed allows us to not only understand these systems better but also to optimize and debug them more efficiently.

However, probably more importantly, looking under the hood allows us to critically examine how these agents operate and to question, for example, how they interact with the world. This is crucial, especially looking at questions regarding safety, trust, and agency.

Thank you for visiting!

I hope, you are enjoying the article! I'd love to get in touch! 😀

Follow me on LinkedIn