LLMs as Drop-in Replacements for Traditional Models – Performing Sentiment Analysis Using GPT-3.5

Recently, I have been thinking and talking a lot about Large Language Models, (generative) AI, and practical use cases in a variety of domains.

When I had a look at the fantastic ChatGPT Prompt Engineering for Developers course by Isa Fulford and Andrew Ng, I realized that there is a specific use case for LLMs that is, at least in my bubble, still very much underrepresented.

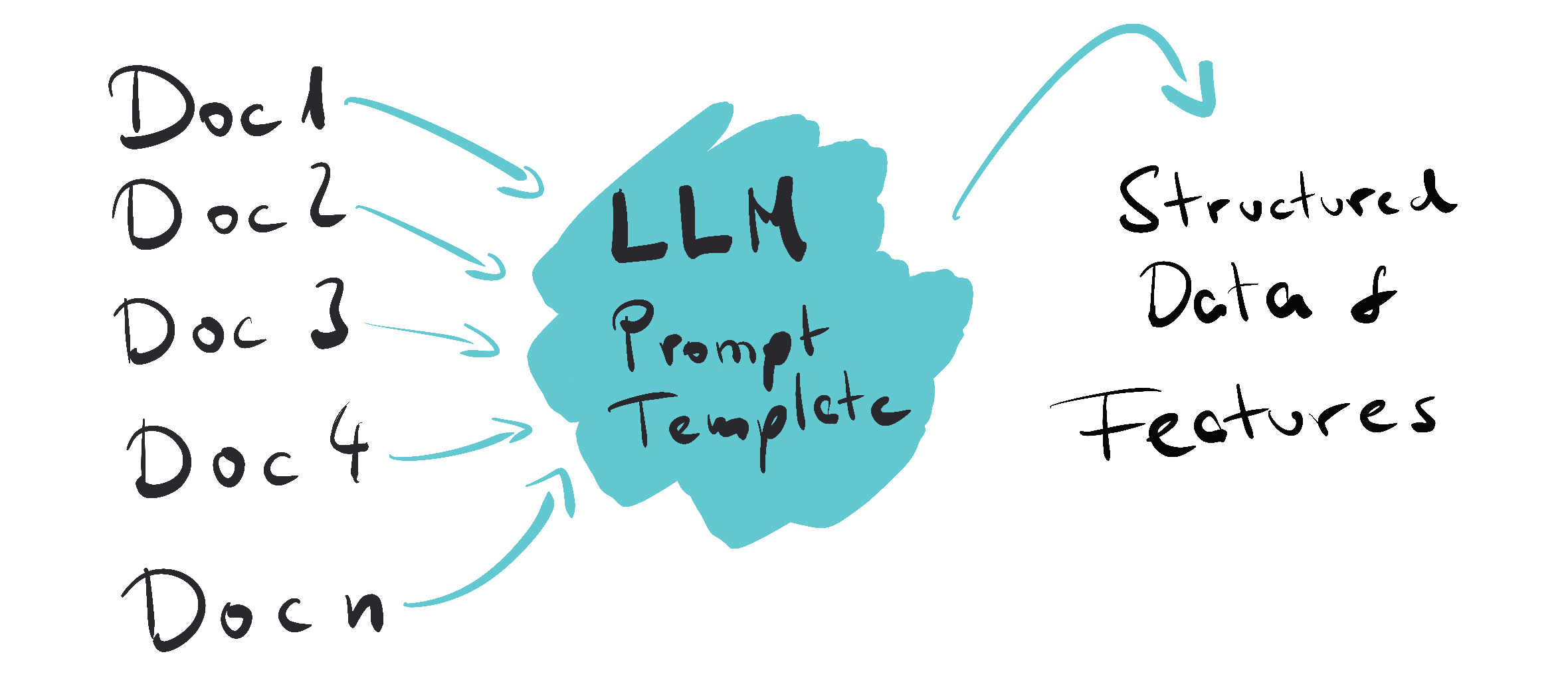

LLMs can be used as drop-in replacements for traditional models and approaches when it comes to turning documents (text) into structured data and/or to extracting features!

Of course, this makes perfect sense, given that LLMs are fantastic at inferring information from text. However, generative models are often reduced to their (creative) generative capabilities, especially when talking to non-developers. While their generative (in the sense of creating original content, not just completing prompts) capabilities are impressive, these models (also) shine when used, for example, as part of a more regular data pipeline.

Hence, in this very short article, I want to demonstrate how an LLM – here we are using OpenAI’s gpt-3.5-turbo – can be used to perform feature extraction. More precisely, we are going to use the model for sentiment analysis.

Sentiment Analysis using GPT-3.5

In the following example, we will be working with a small sample of the “Large Movie Review Dataset” by Maas et al. (2011) (kaggle Version).

This dataset contains a series of IMDB movie reviews and their sentiment (positive, negative).

We will leverage GPT-3.5’s exceptional zero-shot capabilities in order to classify the sentiment of these reviews into either positive or negative. The point of this example is that we can use the LLM as a drop-in replacement for specialized sentiment analysis models.

While we could have a long discussion about sentiment analysis from a linguistic point of view, here it merely serves as an example for extracting a feature from unstructured text. We could have as easily looked at, for example, Named Entity Recognition or any other common NLP task.

Below, you will find the complete code for this example. While we could use a more sophisticated approach using, for example, LangChain, I opted for the simplest solution.

import os

import openai

import pandas as pd

from dotenv import find_dotenv, load_dotenv

from sklearn.metrics import (accuracy_score, classification_report,

confusion_matrix, jaccard_score)

load_dotenv(find_dotenv())

openai.api_key = os.getenv('OPENAI_API_KEY')

def completion(prompt, model='gpt-3.5-turbo', temperature=0):

messages = [{'role': 'user', 'content': prompt}]

response = openai.ChatCompletion.create(

model=model,

messages=messages,

temperature=temperature,

)

return response.choices[0].message['content']

reviews_df = pd.read_csv('IMDB Dataset.csv')

sample = reviews_df.sample(100)

true = []

llm_pred = []

for index, row in sample.iterrows():

true.append(row['sentiment'])

prompt = f'What is the sentiment of the following review, which is delimited with triple backticks: ```{row["review"]}```. Output either "negative" or "positive" and no other characters.'

llm_pred.append(completion(prompt))

true = [1 if x == 'positive' else 0 for x in true]

llm_pred = [1 if x == 'positive' else 0 for x in llm_pred]

print(f'Accuracy: {accuracy_score(true, llm_pred)}')

print(f'Jaccard Score: {jaccard_score(true, llm_pred)}')

print(f'Confusion Matrix:\n{confusion_matrix(true, llm_pred)}')

print(f'Classification Report:\n{classification_report(true, llm_pred)}')

Instead of using a traditional sentiment analysis model, we are using the following prompt template and gpt-3.5-turbo.

What is the sentiment of the following review, which is delimited with triple backticks: ```{review_text}```. Output either "negative" or "positive" and no other characters.

Essentially, we are showing the model the review, and we are asking it to respond with either “positive” or “negative.” The primary difference to traditional models is that we are prompting our feature extraction process using natural language.

We are not bound to the one format. Hence, for example, if we needed a sentiment score, we could change the prompt to something like:

What is the sentiment of the following review, which is delimited with triple backticks: ```{row["review"]}```. Score the sentiment on a scale from 0 to 5 where 5 is the most positive. Only output the sentiment score.

This would, for five reviews, output something like:

['0', '3', '4', '2', '1']

Of course, this only makes sense if the results are accurate. There are at least two dimensions to this question. Firstly, the output of the LLM is (relatively) hard to control. The above prompts will almost always produce the desired output (e.g., only “positive” or “negative”), but the outcome is still probabilistic. Without any further processing, we are not guaranteed that we will only receive the two possible labels.

Secondly, we need to ensure that the LLM can perform the task with decent accuracy.

Given this example, I tested the LLM approach on 100 reviews. The scores, which you can see below, are not mindblowing but quite decent. The LLM was clearly able to identify sentiment and label it correctly. For the 100 examples and multiple runs, gpt-3.5-turbo never failed to produce the desired output.

Accuracy: 0.95

Jaccard Score: 0.8936170212765957

Confusion Matrix:

[[53 4]

[ 1 42]]

Classification Report:

precision recall f1-score support

0 0.98 0.93 0.95 57

1 0.91 0.98 0.94 43

accuracy 0.95 100

macro avg 0.95 0.95 0.95 100

weighted avg 0.95 0.95 0.95 100

However, the specific scores are not the point of this short article!

We could certainly tune the prompt and work on our data to make the scores even better. Also, highly specialized models will likely outperform the LLM in many cases.

What is important is the fact that LLMs can be used as very flexible and highly capable modules in a data pipeline. They can be used to turn unstructured text into structured data and to extract features on the fly!

Conclusion and Caveats

State-of-the-art LLMs and Chatbots such as ChatGPT and Bard are celebrated (and sometimes feared) for having the capabilities to create new text, images, etc. However, they are also a giant leap forward regarding more “boring” tasks: They work wonders in the context of data science and can be deployed in various applications!

Obviously, this is not news! For example, GPT-3 has been used in many different applications since day one. Nevertheless, I think it is essential to keep in mind the different use cases for this technology – ranging from using the LLM as a simple information extraction tool to co-creating with AI. As the example above has shown, LLMs allow us to, for example, rapidly extract new features and work with ever larger sets of unstructured data.

That said, there are at least three caveats that we cannot ignore:

Firstly, especially with large and commercial models, we have less control over the data these models have been trained on – it might be easier to trust a specialized and established model.

Secondly, there is the issue of privacy: In the example above, every sample is sent to OpenAI.

Lastly, we have to consider the cost of using these models. When using commercial models such as gpt-3.5-turbo or gpt-4, we are adding another layer of cost (also environmentally) for inference to our project. Depending on the model used and the amount of data, this can become a problem rather quickly.

Of course, most of these issues can be solved or at least mitigated by relying on open models that we can host and run ourselves.

Thank you for visiting!

I hope, you are enjoying the article! I'd love to get in touch! 😀

Follow me on LinkedIn